Choosing a processor for a build farm

Revision as of 23:17, 26 July 2016

Contents

- 1 Overview

- 2 Hardware considerations

- 3 Methodology

- 4 Porting a compiler to another machine

- 5 Tests

- 5.1 Conditions

- 5.2 Test method

- 5.3 Metrics

- 5.4 Devices

- 5.5 Results

- 5.6 Analysis on 2014/08/05

- 5.7 Analysis on 2015/01/18

- 5.8 Analysis on 2016/02/07

- 5.9 New tests with distcc on the Linux kernel on 2016/02/07

- 5.10 Hardware that deserves being studied - 2016/02/20

- 5.11 Tests conducted on FriendlyARM platforms - 2016/05/21

- 5.12 A new RK3288 board to come : the MiQi board - 2016/07/07

- 5.13 Very interesting discoveries about cheating vendors - 2016/07/09

- 5.14 Introducing the BogoLocs metric - 2016/07/09

- 6 And now the BogoLocs

Overview

Build farms are network clusters of nodes with high CPU performance which are dedicated to build software. The general approach consists in running tools like "distcc" on the developer's workstation, which will delegate the job of compiling to all available nodes. The CPU architecture is irrelevant here since cross-compilation for any platform is involved anyway.

Build farms are only interesting if they can build faster than any commonly available, cheaper solution, starting with the developer's workstation. Note that developers' workstations are commonly very powerful, so in order to provide any benefit, a cluster aiming at being faster than this workstation still needs to be affordable.

In terms of compilation performance, the metrics are lines-of-code per second per dollar (performance) and lines of code per joule (efficiency). A number of measurements were run on various hardware, and this research is still ongoing. To sum up the observations, the most interesting solutions are in the middle range. Devices that are too cheap have too small CPUs or too little RAM bandwidth, and devices that are too expensive optimise for areas irrelevant to build speed, or have a pricing model that follows performance exponentially.

Hardware considerations

Nowadays, most processors are optimised for higher graphics performance. Unfortunately, it's still not possible to run GCC on the GPU. And we're wasting transistors, space, power and thermal budget in a part that is totally unused in a build farm. Similarly, we don't need a floating point unit in a build farm. In fact, if some code to be built uses a lot of floating point operations, the compiler will have to use floating point as well to deal with constants and some optimisations, but such code is often marginal in a whole program, let alone distribution (except maybe in HPC environments).

Thus, any CPU with many cores at a high frequency and high memory bandwidth may be eligible for testing, even if there's neither FPU nor GPU.

Methodology

First, software changes a lot. This implies that comparing numbers between machines is not always easy. It could be possible to insist on building an outdated piece of code with an outdated compiler, but that would be pointless. Better build modern code that the developer needs to build right now, with the compiler they want to use. As a consequence, a single benchmark is useless: it always needs to be compared to one run on another system with the same compiler and code. After all, the purpose of building a build farm is to offload the developer's system, so it makes sense to use this system as a reference and to run the same version of the toolchain on the device being evaluated.

Porting a compiler to another machine

The most common operation here is what is called a Canadian build. It consists in building on machine A a compiler aimed at running on machine B to produce code for machine C. For example a developer using an x86-64 system could build an ARMv7 compiler producing code for a MIPS platform. Canadian builds sometimes fail because of bugs in the compiler's build system, which sometimes mixes up variables between build, host and target. In its defense, the principle is complex and detecting unwanted sharing there is even more difficult than detecting similar issues in more common cross-compilation operations.

In case of failure, it can be easier to proceed in two steps :

- Canadian build from the developer's system to the tested device for the tested device. This results in a compiler that runs natively on the test device.

- native build on the test device of a cross-compiler for the target system, using the previously built compiler.

Since that's a somewhat painful (or at least annoying) task, it makes sense to back up the resulting compilers and simply copy them to future devices to be tested if they use the same architecture.
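The two-step procedure above can be written down as the three machine roles that autoconf-style toolchain builds distinguish through the `--build`, `--host` and `--target` configure options. The sketch below is only an illustration: the three triplets are hypothetical examples (an x86-64 workstation, an ARM test device, i586 output code), not the ones used for the tests on this page.

```python
from dataclasses import dataclass

# Hypothetical triplets, chosen only for illustration.
DEV_SYSTEM = "x86_64-linux-gnu"       # developer's workstation
TEST_DEVICE = "arm-linux-gnueabihf"   # board being evaluated
CODE_TARGET = "i586-linux-gnu"        # platform the final code is built for


@dataclass(frozen=True)
class ToolchainBuild:
    build: str   # machine on which the compiler is being built
    host: str    # machine on which the resulting compiler will run
    target: str  # machine for which the resulting compiler emits code

    def configure_args(self):
        """Return the three standard configure options for this build."""
        return [
            f"--build={self.build}",
            f"--host={self.host}",
            f"--target={self.target}",
        ]


# Step 1: built on the workstation, runs on the device, emits device code.
step1 = ToolchainBuild(DEV_SYSTEM, TEST_DEVICE, TEST_DEVICE)
# Step 2: built on the device with the step 1 compiler, emits target code.
step2 = ToolchainBuild(TEST_DEVICE, TEST_DEVICE, CODE_TARGET)

if __name__ == "__main__":
    for name, step in (("step 1", step1), ("step 2", step2)):
        print(name, " ".join(step.configure_args()))
```

Splitting the work this way means neither step has three different machines involved at once, which is the situation described above as the most fragile.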

Tests

Conditions

This test consisted in building haproxy-git-6bcb0a84 using gcc-4.7.4, producing code for i386. In all tests, no I/O operations were made because the compiler, the sources and the resulting binaries were all placed in a RAM disk. The APU and the ARMs were running from a Formilux RAM disk.

Test method

HAProxy's sources matching Git commit ID 6bcb0a84 are extracted into /dev/shm. A purpose-built toolchain based on gcc-4.7.4 and glibc-2.18 is extracted into /dev/shm as well. The "make" utility is installed on the system if not present. HAProxy is always built in the same conditions: TARGET is set to "linux2628", EXTRA is empty, CC points to the cross-compiler, and LD is set to "#" to disable the last linking phase, which cannot be parallelized. Then the build is run at least 3 times for each parallelism setting, sweeping from 1 to the number of CPU cores in powers of two, and the shortest build time is noted. Example :

    root@t034:~# cd /dev/shm
    root@t034:shm# wget --content-disposition 'http://git.haproxy.org/?p=haproxy.git;a=snapshot;h=6bcb0a84;sf=tgz'
    root@t034:shm# tar xf haproxy-6bcb0a84.tar.gz
    root@t034:shm# tar xf i586-gcc47l_glibc218-linux-gnu-full-arm.tgz
    root@t034:shm# cd haproxy
    root@t034:haproxy# make clean
    root@t034:haproxy# time make -j 4 TARGET=linux2628 EXTRA= CC=../i586-*-gnu/bin/i586-*-gcc LD='#'
    ...
    real 25.032s
    user 1m33.770s
    sys 0m1.690s
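The sweep described in the test method (several runs per parallelism level, keeping the shortest time) could be automated along the following lines. This is a minimal sketch, not the script used for the measurements: the helper names are made up for illustration, the compiler path has to be given explicitly because no shell is there to expand the glob, and the optional `prepare` command stands for the `make clean` between runs.

```python
import subprocess
import sys
import time


def parallel_levels(cores):
    """Powers of two from 1 up to the number of CPU cores."""
    levels, n = [], 1
    while n <= cores:
        levels.append(n)
        n *= 2
    return levels


def haproxy_make_cmd(jobs, cc):
    """The make invocation from the example above, as an argument list."""
    return ["make", "-j", str(jobs), "TARGET=linux2628", "EXTRA=",
            f"CC={cc}", "LD=#"]


def best_time(cmd, runs=3, prepare=None, cwd=None):
    """Run cmd `runs` times and return the shortest wall-clock time."""
    best = float("inf")
    for _ in range(runs):
        if prepare is not None:
            subprocess.run(prepare, cwd=cwd, check=True,
                           stdout=subprocess.DEVNULL)
        start = time.perf_counter()
        subprocess.run(cmd, cwd=cwd, check=True, stdout=subprocess.DEVNULL)
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    # Only prints the commands; the compiler path is a placeholder.
    for jobs in parallel_levels(4):
        print(" ".join(haproxy_make_cmd(jobs, "/path/to/cross-gcc")))
    # Harmless demonstration of the timing helper itself.
    print(best_time([sys.executable, "-c", "pass"], runs=1))
```

A real run would call `best_time(haproxy_make_cmd(jobs, cc), prepare=["make", "clean"], cwd="/dev/shm/haproxy")` for each level.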

Metrics

There are multiple possible metrics to compare one platform to another. The first one obviously is the build time. Another one is proportional to the inverse of the build time: the number of lines of code ("loc") compiled per second ("loc/s"). This one can then be broken down into loc/s/core, loc/s/GHz, loc/s/watt, loc/s/euro, etc. So the first thing to do is to count the number of lines of code that are compiled. By simply replacing "-c" with "-S" in haproxy's Makefile, the resulting ".o" files only contain pre-processed C code with lots of debugging information beginning with "#", and many empty lines. We'll simply get rid of all of this and count the number of real lines the compiler has to run through :

    $ make clean
    $ find . -name '*.o' | xargs cat | grep -v '^#' | grep -v '^$' | wc -l
    0
    $ make -j 4 TARGET=linux2628 EXTRA= CC=/w/dev/i586-gcc47l_glibc218-linux-gnu/bin/i586-gcc47l_glibc218-linux-gnu-gcc LD='#'
    ...
    $ find . -name '*.o' | xargs cat | grep -v '^#' | grep -v '^$' | wc -l
    458870

OK then we have an easily reproducible reference value : the test set consists of 458870 lines of code.
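For readers who prefer to script the count, the pipeline above can be mirrored as follows. This is only a sketch of the same filtering (skip lines starting with "#" and completely empty lines in every "*.o" file under a directory); the function name is hypothetical.

```python
import os


def count_code_lines(root):
    """Count lines in *.o files under root that are neither empty
    nor starting with '#', like the grep/wc pipeline above."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(".o"):
                continue
            with open(os.path.join(dirpath, name), "rb") as handle:
                for line in handle:
                    text = line.rstrip(b"\n")
                    if text and not text.startswith(b"#"):
                        total += 1
    return total


if __name__ == "__main__":
    print(count_code_lines("."))
```

Files are read in binary mode so that unusual bytes in the compiler output cannot raise decoding errors.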

Devices

Machines involved in this test were 32-bit and 64-bit x86 as well as ARMv7 and ARMv8 platforms :

| Date | Machine | CPU family | CPU model | CPU freq (nom/max) | Cores | Threads | RAM size | RAM width (bits) | RAM freq |

|---|---|---|---|---|---|---|---|---|---|

| 2014/08/05 | ThinkPad t430s | x86-64 | core i5-3320M | 2.6/3.3 GHz | 2 | 4 | 8 GB DDR3 | 64 | 1600 |

| 2014/08/05 | C2Q | i686 | Core2 Quad Q8300 | 3.0 GHz (OC) | 4 | 4 | 8 GB DDR3 | 128 | 1066 |

| 2014/08/05 | PC-Engines apu1c | x86-64 | AMD T40-E | 1.0/1.0 GHz | 2 | 2 | 2 GB DDR3 | 64 | 1066 |

| 2014/08/05 | Asus EEE PC | i686 | Atom N2800 | 1.86/1.86 GHz | 2 | 4 | 4 GB DDR2 | 64 | 1066 |

| 2014/08/05 | Marvell XP-GP | armv7/PJ4B | mv78460 | 1.6/1.6 GHz | 4 | 4 | 4 GB DDR3 | 64 | 1866 |

| 2014/08/05 | OpenBlocks AX3 | armv7/PJ4B | mv78260 | 1.33/1.33 GHz | 2 | 2 | 2 GB DDR3 | 64 | 1333 |

| 2015/01/18 | Jesusrun T034 | armv7/cortex A17 | RK3288 | 1.608/1.608 GHz* | 4 | 4 | 2 GB LPDDR2 | 32 | 792 |

| 2015/01/18 | AMD2 | x86-64 | Phenom 9950 | 3.0 GHz (OC) | 4 | 4 | 2 GB DDR3 | 128 | 1066 |

| 2015/01/18 | Cubietruck | armv7/cortex A7 | AllWinner A20 | 1.0/1.0 GHz | 2 | 2 | 2 GB DDR2 | 64 | 960 |

| 2016/02/07 | Pcduino8-uno | armv7/cortex A7 | AllWinner H8 | 1.8/1.8 GHz** | 8 | 8 | 1 GB DDR3 | 32 | ? |

| 2016/03/09 | ODROID-C2 | armv8/cortex A53 | Amlogic S905 | 1.536/1.536 GHz*** | 4 | 4 | 2 GB DDR3 | 32 | 912 |

| 2016/05/21 | NanoPI2-Fire | armv7/cortex A9 | Samsung S5P4418 | 1.4/1.4 GHz | 4 | 4 | 1 GB DDR3 | 32 | 1600 |

| 2016/05/21 | NanoPC-T3 | armv8/cortex A53 | Samsung S5P6818 | 1.4/1.4 GHz | 8 | 8 | 1 GB DDR3 | 32 | 1600 |

| 2016/07/26 | mqmaker MiQi | armv7/cortex A17 | RK3288 | 1.608/1.608 GHz**** | 4 | 4 | 2 GB DDR3 | 64 | 1056 |

* : the device claims to run at 1.8 GHz but the kernel silently ignores frequencies above 1.608 GHz!

** : the device doesn't let you configure this frequency despite being sold as a 2.0 GHz device!

*** : the device claims to run at 2.016 GHz but the kernel silently ignores frequencies above 1.536 GHz!

****: the device used to have the same problem but is now fixed upstream thanks to the vendor's responsiveness!

Results

The results are presented below as build times for various levels of build parallelism.

| Date | Machine | Processes | Time (seconds) | LoC/s | LoC/s/GHz/core | Observations |

|---|---|---|---|---|---|---|

| 2014/08/05 | apu1c | 1 | 116.3 | 3946 | 3946 | |

| 2014/08/05 | apu1c | 2 | 59.4 | 7725 | 3863 | CPU is very hot |

| 2014/08/05 | apu1c | 4 | 64.0 | 7170 | 3585 | Expected, more processes than cores |

| 2014/08/05 | t430s | 1 | 19.3 | 23776 | 7204 | 1 core at 3.3 GHz |

| 2014/08/05 | t430s | 2 | 10.9 | 42098 | 6790 | 2 cores at 3.1 GHz |

| 2014/08/05 | t430s | 4 | 9.1 | 50425 | 8133 | 2 cores at 3.1 GHz, 2 threads per core |

| 2014/08/05 | AX3 | 2 | 93.5 | 4908 | 1841 | running in Thumb2 mode |

| 2014/08/05 | XP-GP | 2 | 74.7 | 6143 | 1920 | running in Thumb2 mode |

| 2014/08/05 | XP-GP | 4 | 39.75 | 11544 | 1804 | running in Thumb2 mode |

| 2014/08/05 | EEE PC | 2 | 61.0 | 7522 | 2022 | |

| 2014/08/05 | EEE PC | 4 | 46.6 | 9847 | 2647 | 2 cores, 2 threads per core |

| 2015/01/18 | C2Q | 1 | 30.0 | 15296 | 5099 | |

| 2015/01/18 | C2Q | 2 | 16.1 | 28501 | 4750 | 2 cores on the same die |

| 2015/01/18 | C2Q | 4 | 8.77 | 52323 | 4360 | L3 cache not shared between the 2 dies. |

| 2015/01/18 | T034 | 1 | 74.7 | 6143 | 3820 | |

| 2015/01/18 | T034 | 2 | 41.7 | 11004 | 3422 | |

| 2015/01/18 | T034 | 4 | 25.0 | 18355 | 2853 | slow memory seems to be a bottleneck |

| 2015/01/18 | AMD2 | 1 | 26.4 | 17381 | 5794 | |

| 2015/01/18 | AMD2 | 2 | 13.8 | 33251 | 5542 | |

| 2015/01/18 | AMD2 | 4 | 7.47 | 61428 | 5119 | |

| 2015/01/18 | Cubietruck | 1 | 244 | 1881 | 1866 | |

| 2015/01/18 | Cubietruck | 2 | 141 | 3254 | 1614 | Cortex A7 is very slow! |

| 2016/02/07 | Pcduino8-uno | 1 | 128 | 3585 | 1992 | |

| 2016/02/07 | Pcduino8-uno | 2 | 64 | 7170 | 1992 | |

| 2016/02/07 | Pcduino8-uno | 4 | 39.2 | 11705 | 1625 | |

| 2016/02/07 | Pcduino8-uno | 8 | 28.5 | 16101 | 1118 | 25.7s really consumed, not enough files |

| 2016/03/09 | ODROID-C2 | 1 | 109.3 | 4198 | 2733 | running in aarch64 mode |

| 2016/03/09 | ODROID-C2 | 2 | 62.3 | 7365 | 2397 | A53 in aarch64 is approximately ... |

| 2016/03/09 | ODROID-C2 | 4 | 37.7 | 12171 | 1981 | ... equal to A9 in Thumb2 |

| 2016/03/12 | ODROID-C2 | 1 | 86.0 | 5336 | 3474 | running in Thumb2 mode (armv7 32 bits code)... |

| 2016/03/12 | ODROID-C2 | 2 | 47.5 | 9660 | 3145 | ... 27, 31 and 35% faster than in ARMv8 mode... |

| 2016/03/12 | ODROID-C2 | 4 | 27.96 | 16410 | 2671 | ... respectively for 1, 2 and 4 processes. |

| 2016/05/21 | NanoPI2-Fire | 1 | 124.8 | 3677 | 2626 | |

| 2016/05/21 | NanoPI2-Fire | 2 | 70.6 | 6500 | 2321 | |

| 2016/05/21 | NanoPI2-Fire | 4 | 48.9 | 9384 | 1676 | |

| 2016/05/21 | NanoPC-T3 | 1 | 104.6 | 4387 | 3134 | running in Thumb2 mode (armv7 32 bits code) |

| 2016/05/21 | NanoPC-T3 | 2 | 52.7 | 8707 | 3110 | |

| 2016/05/21 | NanoPC-T3 | 4 | 29.6 | 15502 | 2768 | |

| 2016/05/21 | NanoPC-T3 | 5 | 24.7 | 18578 | 2654 | |

| 2016/05/21 | NanoPC-T3 | 6 | 23.7 | 19362 | 2304 | |

| 2016/05/21 | NanoPC-T3 | 7 | 22.5 | 20394 | 2081 | Not enough files to maintain parallelism |

| 2016/05/21 | NanoPC-T3 | 8 | 21.8 | 21049 | 1879 | |

| 2016/07/26 | mqmaker MiQi | 4 | 45.2 | 10152 | 1578 | very slow by default, DDR3 runs at only 200 MHz! |

| 2016/07/26 | mqmaker MiQi | 4 | 22.6 | 20304 | 3157 | DDR3 at 528 MHz (echo p > /dev/video_pstate) |
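The derived columns of the table follow directly from the build time and the 458870-line test set. The sketch below shows the arithmetic, assuming that the per-core figure divides by the number of cores actually used and by the frequency noted for the run; the function names are only illustrative.

```python
TEST_SET_LOC = 458870  # lines of code in the reference test set


def loc_per_second(build_seconds, loc=TEST_SET_LOC):
    """Lines of code compiled per second for a given build time."""
    return loc / build_seconds


def loc_per_second_per_ghz_per_core(build_seconds, ghz, cores,
                                    loc=TEST_SET_LOC):
    """Same metric normalised by clock frequency and cores in use."""
    return loc_per_second(build_seconds, loc) / ghz / cores


if __name__ == "__main__":
    # T034, 4 processes: 25.0 s on 4 cores at 1.608 GHz.
    print(loc_per_second(25.0))
    print(loc_per_second_per_ghz_per_core(25.0, 1.608, 4))
    # t430s, 4 processes: 9.1 s on 2 cores at 3.1 GHz.
    print(loc_per_second_per_ghz_per_core(9.1, 3.1, 2))
```

Small differences in the last digit against the table can come from rounding versus truncation.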

Analysis on 2014/08/05

The Core2 Quad is outdated. It's exactly half as powerful as the new Core i5 despite running at roughly the same frequency. ARMs do not perform that well here. The XP-GP achieves the performance of one core of the C2Q using all of its 4 cores. Since it's running at half the frequency, we can consider that each core of this Armada-XP chip delivers approximately half of the performance of a C2Q core at the same frequency in this workload. The Atom in the EEE-PC, despite a slightly higher frequency than the Armada-XP, is not even able to catch up with it. The APU platform is significantly more efficient than the Atom at similar frequency, given that it delivers per core at 1.0 GHz the same performance as the Atom at 1.86 GHz. However the Atom can use its HyperThreading to save an extra 25 percent of build time and reach a build time that the APU cannot achieve.

The conclusion here is that low-end x86 CPUs such as the Core i3 3217U at 1.8 GHz should still be able to achieve half of the Core i5's performance, or be on par with the C2Q, despite consuming only 17W instead of the C2Q's 77W. All x86 machines are still expensive because you need to add memory and sometimes a small SSD if you cannot boot them over the network. Given the arrival of the new Cortex A17 at 2+ GHz, supposed to be 60% faster than the A9 clock-for-clock (Armada XP's PJ4B core is very similar to the A9), there could be some hope to see interesting improvements there. If an A17 could deliver half of the i5's performance for a quarter of its price (or half the price of a fully-equipped low-end i3), it would mean a build farm based on these devices would not be stupid.

Analysis on 2015/01/18

As expected, the Cortex A17 running at the heart of the RK3288 shows very good performance, and a single core performs about 80% faster than Armada XP's clock for clock. This is visible in the single core test, which shows exactly the same speed as two cores on the XP-GP, and in the two-core test, which is almost twice as fast on the RK3288. However, this 4-core CPU doesn't scale well to 3 or 4 processes. The very likely reason is that not only is the RAM limited to a 32-bit bus, but it also runs at 792 MHz only. In comparison, the Armada XP's memory runs at 1600 MHz on a 64-bit bus, resulting in about 4 times the bandwidth. The 4-core run on the RK3288 was only 67% faster than the 2-core one. Linear scaling should have shown around 21 seconds for 4 processes instead of 25. It is possible that other devices running faster DDR3/DDR3L and more channels would not experience this performance loss. That said, this device is by far the fastest of all non-x86 devices here and is even much faster than all low-end CPUs tested so far. 4 cores of RK3288 give approximately the same build power as one core of an Intel Core i5 at 3 GHz. The device is cheap (less than 75 EUR shipping included) and can really compete with low-end PCs, which still require the addition of RAM and storage. For less than 300 EUR, you get the equivalent of four 3 GHz Intel cores with 8 GB of RAM, and it is completely fanless.
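The bandwidth and scaling figures quoted in this paragraph can be checked with a few lines of arithmetic. The sketch below assumes that peak memory bandwidth is simply proportional to bus width times memory frequency, which ignores latency and controller efficiency; the function names are illustrative.

```python
def peak_bandwidth(width_bits, freq):
    """Relative peak bandwidth: bytes per transfer times frequency."""
    return width_bits / 8 * freq


def speedup(time_before, time_after):
    """How many times faster the second run is than the first."""
    return time_before / time_after


# Memory figures quoted in the paragraph above.
armada_xp = peak_bandwidth(64, 1600)
rk3288 = peak_bandwidth(32, 792)
bandwidth_ratio = armada_xp / rk3288

# T034 build times from the results table: 41.7 s (2 procs), 25.0 s (4).
observed = speedup(41.7, 25.0)
ideal_four_process_time = 41.7 / 2

if __name__ == "__main__":
    print(f"bandwidth ratio: {bandwidth_ratio:.2f}")
    print(f"observed 2 to 4 process speedup: {observed:.3f}")
    print(f"ideal 4 process time: {ideal_four_process_time:.2f} s")
```

The ratio comes out slightly above 4, the speedup at about 1.67 (the 67% quoted above), and the ideal 4-process time at just under 21 seconds.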

Comparing clock speed and core count, the Cortex A7 in the Cubietruck is the slowest, but it's still more or less on par with the Sheeva core in the Armada XP, which is normally comparable to a Cortex A9. Here it suffers from a very low frequency (1.008 GHz) but possibly the big.LITTLE designs offering it as a high-frequency companion to an even faster Cortex A15/A17 can bring some benefits. The Cortex A17 cores in the T034 are 26% faster than the Atom N2800 cores in the EEE PC. However, the Atom manages to catch up when it uses Hyperthreading. It's said that newer Atoms are about 20% faster, so they could catch up with it without Hyperthreading, and manage to be twice as fast in their quad-core version.

The Intel Core as found in the Core i5 is performing very well, but hyperthreading brings little benefit here (13%). Thus a CPU should be chosen with real cores (eg: newer quad-core i5 vs dual-core quad-thread i3). The Phenom is performing quite well also, especially if we consider it's an old platform of the same generation as the Core2. Also, newer CPUs are proposed with many cores (4-8) and a high frequency (4+ GHz), leaving hopes for a very fast build platform.

Concerning the costs, an AMD diskless platform can be built for about 400 EUR with eight 4 GHz cores, 4 GB RAM, a motherboard and a power supply. Such a machine could theoretically deliver around 160 kloc/s. The ARM-based T034 would require 9 machines to match that result, and would cost about 675 EUR + the switch ports. However it would take 10 times less space and would be fanless, silent and eat a third of the power. It seems reasonable to expect that a big.LITTLE machine running 4 Cortex A15 cores at 2 GHz and 4 Cortex A7 cores at 1.3 GHz could reach 25 kloc/s. Such a machine could be made for around 150 EUR using a Cubietruck 4 or an Odroid XU3 board. But compared to the other solutions, that's even more expensive (around 900 EUR for 160 kloc/s). Maybe an entry-level Atom-based motherboard with an external power supply can compete with the AMD if one manages to find it at an affordable price.
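The T034 node count and cost quoted above follow from the measured throughput. A small sketch of that sizing calculation, assuming throughput adds up linearly across nodes and using the 18355 loc/s and 75 EUR figures from this page:

```python
import math


def nodes_needed(target_loc_per_s, node_loc_per_s):
    """Whole number of nodes required to reach the target throughput."""
    return math.ceil(target_loc_per_s / node_loc_per_s)


def farm_cost(target_loc_per_s, node_loc_per_s, node_price):
    """Total price of the nodes, excluding switch ports and accessories."""
    return nodes_needed(target_loc_per_s, node_loc_per_s) * node_price


if __name__ == "__main__":
    # Target: 160 kloc/s. T034: 18355 loc/s with 4 processes, 75 EUR each.
    print(nodes_needed(160_000, 18355))
    print(farm_cost(160_000, 18355, 75))
```

Linear scaling is optimistic in practice, since the machine distributing the jobs and the network also take their share.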

In this test, the AMD was the fastest of all systems, and the ARM was the fastest fanless system and also the one delivering the highest throughput per cubic centimeter.

Analysis on 2016/02/07

The PCduino8-uno is quite an interesting device. For $49, you get 8 cores running at 1.8 GHz (it's advertised as 2.0 GHz but that's wrong, cpu-freq refuses any frequency above 1.8 GHz). The processor is very similar to the A83T. It supports DVFS (dynamic voltage and frequency scaling) and automatically adjusts its frequency based on the temperature. This is a benefit for hardware vendors, who can easily overclock CPUs and advertise the highest possible frequency, at the expense of the consumers, who find them slow because these devices throttle themselves. The PCduino8-uno is no exception: after 2 or 3 seconds, it slows down to only 4 cores running at 480 MHz, and completely stops the 4 other ones. The temperature thresholds are extremely low (it starts to throttle above 50 degrees). This thermal throttling can be disabled, but at exactly 100 degrees the device will shut down, making it really useless for anything. By writing to the proper CPU register, it's possible to also disable the thermal shutdown. The CPU then runs fine at up to 121 degrees, and freezes at 128. Installing a 4cmx4.5cm heatsink on this device was not easy, but adhesive heatsinks of 3.5x3.5cm can easily be found on the net. With this heatsink, the temperature rarely exceeds 80 degrees. This combined with a disabled thermal throttling is enough to let the device *really* work with all 8 cores at 1.8 GHz.

The test with 4 processes shows that the memory bandwidth starts to limit the build performance. According to the datasheets, the DRAM bus is only 32 bits, which starts to be a bit short for 8 build processes in parallel. No information was found on the DRAM frequency though it's expected to be between 800 and 1066 MT/s.

The test with 8 processes exhibited an issue that never appeared in previous tests and which is worth considering for future hardware choices. It happens that haproxy contains 4 very large C files, each taking between 8 and 20 seconds to build. So when these files are picked in the middle of the build process, they can be left alone on one or two cores with the other ones idle, waiting for them to finish. The only way to improve the situation is to force these files to be built first so that the other smaller ones can be built in parallel. Regardless of the number of cores or available machines, it indicates that the build time using such hardware will never go below 20 seconds.
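The effect of build order described above can be reproduced with a toy scheduling model. The sketch below is a simplification: each file goes, in the given order, to whichever worker becomes free first, and the durations are invented for illustration (eight 1-second files and one 20-second file), not measured values.

```python
import heapq


def makespan(durations, workers):
    """Total elapsed time when each job, in the given order, is handed
    to the worker that becomes free first (greedy list scheduling)."""
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    for duration in durations:
        start = heapq.heappop(free_at)      # earliest available worker
        heapq.heappush(free_at, start + duration)
    return max(free_at)


# Hypothetical durations in seconds: small files first, big file last.
small_first = [1] * 8 + [20]
# Same files with the longest one forced to the front of the queue.
large_first = sorted(small_first, reverse=True)

if __name__ == "__main__":
    print(makespan(small_first, 4))
    print(makespan(large_first, 4))
    # No ordering and no number of workers can beat the longest file.
    print(makespan(large_first, 64))
```

Building the large file first removes the idle tail, yet the total never drops below the duration of the longest single file, which is the floor mentioned above.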

This shows the importance of raw performance. It's also interesting to note that at similar frequency, the RK3288 in the T034 is exactly as fast as the H8 in the PCduino8-uno but with half of the cores. Thus it will deliver the same build performance with half of the latency (ie with enough nodes it will be possible to go down to 10 seconds instead of 20). But for workloads involving many small files, the PCDuino8-uno is 30% cheaper, though it requires some hardware adjustments.

Other very cheap CPUs exist, such as the H3 (quad core 1.2 GHz). Some are sold overclocked at 1.6 but cannot sustain this frequency when used. Users report that 1.2 is the maximum stable frequency with a heatsink. When the price of a power supply, a microSD card, and a switch port are added, they're probably not interesting anymore, being 3 times slower than the H8 at 1.8 GHz for around $10-$15.

New tests with distcc on the Linux kernel on 2016/02/07

Since we don't have enough small files in haproxy to keep all cores busy most of the time, a new test was run with distcc running on the t430s, involving various combinations of itself, the pcduino8-uno and the t034.

A first test showed that the t034 would periodically segfault on the Linux kernel and refuse to run distccd on the Android kernel, apparently due to selinux preventing a non-root process from creating a socket. The crashes were caused by the Linux kernel running the memory at 456 MHz while the Android kernel was running it at 396 MHz. A new device tree was made to fix this.

| Date | make -j# | Processes on t430s | Processes on pcduino8 | Processes on t034 | Build time (s) | %idle t430s | %idle pcduino8 | %idle t034 |

|---|---|---|---|---|---|---|---|---|

| 2016/02/07 | 4 | 4 | 0 | 0 | 128.9 | 0% | - | - |

| 2016/02/07 | 12 | 4 | 8 | 0 | 102.8 | 0% | 8-25% | - |

| 2016/02/07 | 12 | 3 | 9 | 0 | 105.5 | 25% | 0% | - |

| 2016/02/07 | 12 | 2 | 10 | 0 | 111.5 | 35% | 0% | - |

| 2016/02/07 | 16 | 4 | 12 | 0 | 100.4 | 0% | 0% | - |

| 2016/02/07 | 8 | 0 | 8 | 0 | 280.2 | 85% | 0% | - |

| 2016/02/07 | 4 | 0 | 0 | 4 | 263.7 | 85% | - | 9% |

| 2016/02/07 | 6 | 0 | 0 | 6 | 258.4 | 85% | - | 0% |

| 2016/02/07 | 16 | 0 | 10 | 6 | 138.1 | 45% | 0% | 0% |

| 2016/02/07 | 18 | 4 | 9 | 5 | 84.9 | 0% | 1% | 1% |

| 2016/02/07 | 19 | 3 | 10 | 6 | 87.7 | 5% | 0% | 0% |

| 2016/02/07 | 20 | 4 | 10 | 6 | 81.5 | 0% | 1% | 1% |

| 2016/02/07 | 24 | 4 | 12 | 8 | 84.2 | 0% | 1% | 1% |

As can be seen, the Cortex A17 in the t034 delivers exactly the same build time with half the cores as the Cortex A7 in the pcduino8-uno, so for this workload both machines are interchangeable, and the pcduino8 is cheaper for the same level of performance. These two machines combined achieve almost the same level of performance as the t430s alone. When the t430s is used only by distcc, it's pretty clear that it can easily handle about 6 such machines before being saturated. Thus it should be possible to make the build time go as low as about 45 seconds with 6 machines (it's 2mn09 natively). 6 pcduino8 will cost about $300 plus the microSD cards, the heatsinks and the power supplies. This can be cheaper than an x86 equivalent, consume less power and remain fanless. It is expected that the Odroid XU4 board would deliver approximately 1.5 times the performance of these machines for 1.5 times the cost of the pcduino8. It would also have a much lower latency than the pcduino8, making it suitable to build projects using large files. Such an option might be really worth considering in the future. New cheap boards involving the Cortex A53 (64-bit) still run at too low a frequency to be of any use here.
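One way to arrive at figures of this order from the table is sketched below. It is an assumed reading, not necessarily the reasoning used for the estimate above: the t430s is 85% idle when feeding a single remote board, so each board is taken to cost it about 15% of its CPU, and build time is assumed to scale linearly with the number of boards.

```python
def max_remote_nodes(coordinator_idle_percent):
    """Whole number of remote nodes the coordinating machine can feed,
    assuming each node costs it the same share of CPU."""
    busy = 100 - coordinator_idle_percent
    if busy <= 0:
        raise ValueError("coordinator shows no load, cannot extrapolate")
    return 100 // busy


def ideal_build_time(single_node_seconds, nodes):
    """Build time with perfect linear scaling across identical nodes."""
    return single_node_seconds / nodes


if __name__ == "__main__":
    nodes = max_remote_nodes(85)
    print(nodes)
    # Single-board build times from the table: pcduino8 alone, t034 alone.
    print(ideal_build_time(280.2, nodes))
    print(ideal_build_time(263.7, nodes))
```

Both single-board times land in the mid-forties of seconds once divided by six, which is consistent with the "about 45 seconds" estimate.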

By making use of the "pump" feature of distcc, it might be possible to further increase the number of nodes, at the expense of a bit more constraints.

Hardware that deserves being studied - 2016/02/20

FriendlyARM proposes a 1.4 GHz quad-A9 for only $23 with 1 GB RAM and native gigabit connectivity. This board, while theoretically slower than most of the competitors above, costs only 1/3 of the price of the quad-A17 for half of the power. It might represent an interesting device when lots of files have to be built (eg: Linux kernel).

Tests conducted on FriendlyARM platforms - 2016/05/21

We finally ordered a few FriendlyARM NanoPI2 boards. And as friendly as they are, they even offered us two extra boards for free, which is awesome! One is the NanoPC-T2 which runs on the exact same CPU as the NanoPI2 (4xCortex A9 Samsung S5P4418 at 1.4 GHz), and the other one is a very new 64-bit NanoPC-T3 (8xCortex A53 Samsung S5P6818 at 1.4 GHz). This last one is very new, the image was not yet on the site when the board arrived. The board is almost exactly the same but with a different CPU. Since the new board is marked "NanoPC-T2/T3", we suspect that the same board will be used for future batches (the CPUs have identical pinouts).

As anticipated, the Cortex A9 is not very fast. Clock for clock, it's even a bit slower than the XP-GP board which runs with 64-bit memory at 1600 MHz. But the board is amazingly cheap, really small and extremely convenient to build a stack of machines. Two of them fit in the hand, and there are mounting holes supporting M3 screws. The power and RJ45 are opposed and there's no need for any side connector, so it's very easy to build extremely compact machines with these boards, much more than it is with the T034 board in fact.

It was noted in some memory bandwidth tests that the simple fact that the video controller is enabled consumes 25% of the memory bandwidth. The only way we found to disable it right now is to freeze lightdm and kill X11. Otherwise even in console mode the framebuffer runs at a high resolution wasting memory bandwidth. The tests above were run under such optimal conditions. We'll have to rebuild a kernel completely disabling HDMI support to get optimal results in the future.

The NanoPC-T3 is an interesting beast. Per core, it delivers the same performance level as the Odroid-C2 but at a 30% lower frequency (1.4 GHz instead of 2.0 GHz), while it's supposed to be running on the same A53 cores. Maybe the memory controller makes a difference here. (Update 2016/07/09: the Odroid-C2 really runs at 1.536 GHz, see below). And with all 8 cores combined, it's the first ever non-x86 board we see here which crosses the 20s build time limit (19.2s) when building for x86_64. It becomes obvious that the memory bandwidth becomes a bottleneck with so many cores but despite this it's faster than other boards. Initially we thought the limited scaling at high process counts was caused by limited memory speeds, but given that the user time almost doesn't change (2m02 to 2m05) between 6 and 8 processes, it only means the CPUs simply don't have any extra job to run. The CPU would definitely deserve a bit of overclocking to 1.6 GHz to keep up with the RK3288 on similar core counts. It could then be used both for small and large projects without hesitating.

There is an important point we forgot to mention above regarding the heat. Both boards stay really cool, but during builds they start to heat a little bit. The 6818 includes a thermal sensor which the 4418 doesn't have. There is some form of automatic thermal throttling once the temperature reaches 87 degrees C. The 4418 doesn't appear to have any such feature, though in practice we've seen it occasionally report 800 MHz instead of 1400 for a few seconds during build despite being forced to the "performance" governor. Using a very small heatsink the size of the CPU and 6mm high is enough to keep the CPUs cool below 70 degrees during build so that it never throttles. It is important to use low profile heatsinks when you want to stack the boards.

We're now impatient to see a NanoPI3-Fire board equipped with the S5P6818. It would by far be the best board to build a server farm. The board design is so convenient, it doesn't contain any useless chips, and it's reasonably cheap. In the mean time we need to figure if it's possible to run these CPUs at a higher frequency. Since we're not using the GPU at all we can reuse a part of the thermal envelope for raw CPU power.

Update 2016/07/07: there's now a NanoPI-M3 with the same components and characteristics for only $35. It's very cheap for such a fast board. We ordered one but couldn't resist installing it in a robot so it's not part of the farm!

A new RK3288 board to come : the MiQi board - 2016/07/07

MQMaker makes the MiQi board, an RK3288-based board. It's slightly larger than the T034, but has mounting holes, eMMC on board, and uses the two memory channels from the SoC. Also it's cheaper at $40. We've ordered one to see how it performs.

Very interesting discoveries about cheating vendors - 2016/07/09

A while ago we noticed that some devices were reporting a cpufreq value higher than reality. The first one to show this was the T034, an RK3288-based HDMI stick. It advertises 1.8 GHz, but showed exactly the same performance at 1608, 1704 and 1800 MHz, proving that it was running at 1608 MHz instead. This was later confirmed when digging into the code, where there is a "safety lock" to prevent the CPU from going above 1608 MHz (found as "SAFETY_FREQ" in commit 611037b "rk3288: set safety frequency" of the Firefly kernel that everyone uses).

In several newer versions of the T034 sold as CS008, you can randomly find fake DDR3 RAM chips which are in reality DDR2. It is visible because some of them are marked K4B4G1646 which should in fact be a 512MB DDR3 16bit chip of a completely different package format, the true one is K3P30E00M (2GB DDR2 32bit).

Recently while testing the FriendlyARM NanoPI3, we found it quite strange that being at 1.4 GHz, it was only very slightly slower than the Odroid-C2 supposed to be at 2.0 GHz when using the same number of cores (both Cortex A53). There is no doubt that the Odroid-C2 reports various frequencies among which 1000, 1296, 1536, 1752 and 2016 MHz. Doing some careful measurements will reveal that on the Odroid-C2, 1536, 1752 and 2016 MHz have exactly the same performance. Additionally, when running the ramlat utility, it will show that the L1 cache speed matches the CPU's frequency for all frequencies up to 1536 MHz and that for 1752 and 2016 it remains exactly equal to 1536. That explains why the Odroid-C2 is so slow. The Cortex A53 isn't that slow in fact, as proven by the NanoPI3, it's just running at too low a frequency. At this time we don't know whether it's a bug, whether it was added as a protection against overheating, or whether it was made as a way to boost sales by advertising a higher frequency than reality. The numbers in the table above have been updated to reflect reality.
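The detection method used in both cases (run the same benchmark at each advertised frequency and see where performance stops improving) can be sketched as follows. The scores in the example are invented purely to illustrate the shape of the result; they are not measurements from any of the boards above, and the 2% tolerance is an arbitrary assumption.

```python
def effective_max_freq(scores, tolerance=0.02):
    """Return the lowest advertised frequency whose benchmark score is
    within `tolerance` of the best score seen at any frequency.

    scores maps an advertised frequency (MHz) to a benchmark score
    where higher means faster.
    """
    best = max(scores.values())
    threshold = best * (1 - tolerance)
    return min(freq for freq, score in scores.items() if score >= threshold)


# Hypothetical scores: performance follows the clock, then flattens.
example_scores = {
    1000: 100.0,
    1296: 129.6,
    1536: 153.6,
    1752: 153.7,
    2016: 153.5,
}

if __name__ == "__main__":
    print(effective_max_freq(example_scores))
```

Any frequency setting above the returned value brings no measurable gain, which is the signature of a silently capped clock.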

Introducing the BogoLocs metric - 2016/07/09

Some tests run with the ramlat utility showed that the build time depends almost solely on the L1 cache speed, then a little bit on the L2 cache speed, and finally on the DRAM speed. It does not depend so much on memory bandwidth as on latency. Some will say there's nothing new here: all of us who have assembled a very fast PC for development know that it is of utmost importance to select the lowest latency DRAM sticks.

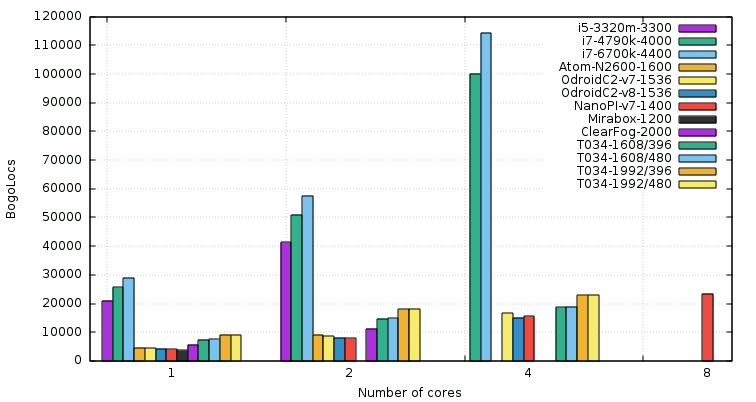

But for the first time we have a model which reports almost the same performance numbers as the measurements above by just looking at L1, L2 and DDR latency. Of course this is bogus, though not that much. Thus we created a new unit for this metric, the "BogoLocs" (for Bogus Lines Of Code per Second).

The principle of the measurement relies on a few observations. The first one is that a compiler walks across a lot of linked lists and trees. Such operations systematically require one pointer access to get the next element, and at least one read to study the data and know where to stop. Such data are often grouped together within the same cache line or are quite close. Linked list elements, on the other hand, have absolutely no relation to each other and generally produce a random walking pattern across the memory space. The second observation is that most of the manipulated data are found in the CPU caches, because the compiler manipulates adjacent objects when it tries to optimize code. It then makes sense to consider that with such high locality, both the L1 and L2 cache hit ratios are high.
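The random walk across linked elements can be illustrated with a small pointer-chasing sketch. This is not the ramlat utility, only an illustration of the access pattern: the function names are hypothetical, and in Python the interpreter overhead dominates, so the reported rate says nothing about real cache latency. A native implementation would be needed for actual measurements.

```python
import random
import time


def build_chain(n, seed=0):
    """Sattolo's algorithm: a random permutation made of one single cycle."""
    rng = random.Random(seed)
    nxt = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # j < i guarantees a single cycle
        nxt[i], nxt[j] = nxt[j], nxt[i]
    return nxt


def walk(nxt, steps, start=0):
    """Follow the chain: each access depends on the result of the previous one."""
    pos = start
    for _ in range(steps):
        pos = nxt[pos]
    return pos


if __name__ == "__main__":
    steps = 1_000_000
    chain = build_chain(1 << 16)
    t0 = time.perf_counter()
    walk(chain, steps)
    dt = time.perf_counter() - t0
    print(f"{steps / dt / 1e6:.1f} M steps/s (interpreter overhead included)")
```

Because every element is visited exactly once per cycle and in random order, the working set is the whole chain, and varying its size moves the walk from L1 to L2 to DRAM.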

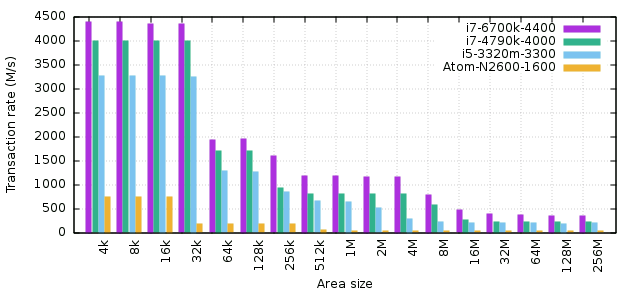

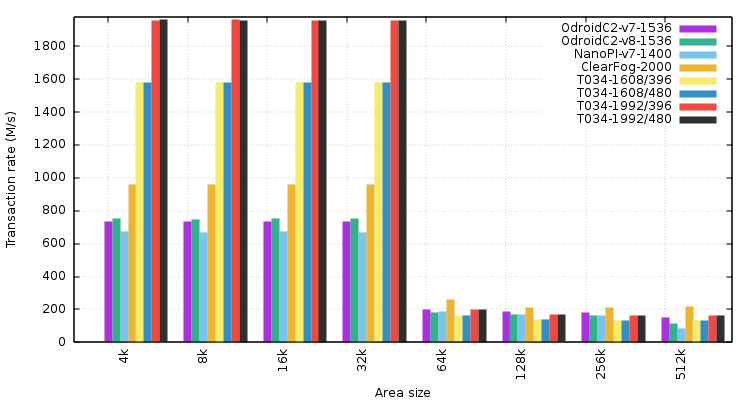

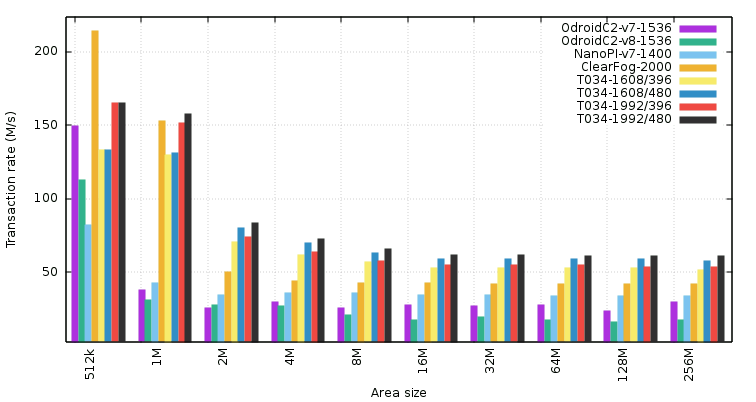

We have graphed the memory latency metrics for a number of devices, most of them at stock frequency, some overclocked. The T034 is interesting because both its CPU and RAM were independently overclocked to show the effect of each. The graphs below report the transaction rate in millions per second for each device at various memory area sizes. Due to the number of columns, the first graph shows only some x86 devices which clearly exhibit the L1, L2, L3 and DDR performance, and the next graphs show the cache performance of some ARM devices and a zoom on their respective DDR performance.

Performance on x86 across all the memory area sizes :

Performance on ARM for memory areas fitting into L1 and L2 :

Performance on ARM for memory areas fitting into external RAM. Most devices have 512kB of shared L2 cache, except the T034 (RK3288) which has 1MB shared :

The x86 graph shows quite visibly the L1, L2 and L3 cache sizes of all tested CPUs. It is worth noting that the L2 cache is per-core and is very fast on x86, with around 2 words read every 2 CPU cycles. The performance penalty when hitting the L2 is thus minimal. This probably explains why the SLZ compressor performs so well. In general, x86 achieves in L2 areas as large as 256kB the performance that the best ARM chips achieve in L1 areas of 32kB. Similarly, the performance achieved by x86 in DRAM is as good as or even better than what the best ARM chips achieve in L2 cache here.

The ARM cache performance graph shows that L1 cache speed depends only on the CPU's frequency. For Cortex A9 and A53, the L1 cache allows fetching two words every two cycles (and it was verified that one word is fetched every cycle). For the Cortex A17, two words are fetched every CPU cycle, which is a nice improvement. The T034 was run at two CPU frequencies, each tested at two DRAM frequencies. It's obvious here that the DRAM frequency has zero impact in all cache-related tests. The L2 cache latency is not good at all on these ARM devices, as can be seen between 64kB and 512kB. It costs 8 CPU cycles on the Cortex A53, 9 on the A9 found in the ClearFog, and 12 on the A17 found in the T034! This explains why the ClearFog (A9 at 2.0 GHz) now performs significantly better than the T034 (A17 at 1.6/2.0).

One thing is not shown here: the L1 cache of the Cortex A9 and A53 occasionally showed much lower performance for the 32kB area size, so the tests had to be re-run. Some datasheets mention that these caches are 4-way set associative and physically addressed. Since we know neither which bits are used to address the cache nor where the process is mapped, it's quite possible that 1/4 of it experiences some eviction when addresses conflict between the first part and the last one, and that its usable size for consecutive addresses should be considered as "24 to 32kB" instead. This should not be a problem in a compiler, where the access patterns are more random and never span exactly 32kB, so the tests were re-run to get the correct numbers when needed.
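One possible explanation of this effect can be sketched as follows. It assumes a physically indexed 32kB 4-way cache and 4kB pages (the page size is an assumption, and the function names are hypothetical). Each way then covers 8kB, so a physical page lands in one of two positions ("colors") inside a way, and a 32kB area made of 8 pages only fits entirely if no color receives more than 4 pages.

```python
def page_colors(cache_bytes=32 * 1024, ways=4, page_bytes=4096):
    """Number of distinct page positions ("colors") inside one cache way."""
    way_bytes = cache_bytes // ways
    return max(1, way_bytes // page_bytes)


def fits_without_eviction(phys_pages, cache_bytes=32 * 1024, ways=4,
                          page_bytes=4096):
    """True if no color receives more pages than there are ways.

    phys_pages is a list of physical page frame numbers backing the area.
    """
    colors = page_colors(cache_bytes, ways, page_bytes)
    load = [0] * colors
    for frame in phys_pages:
        load[frame % colors] += 1
    return max(load) <= ways


if __name__ == "__main__":
    # Physically contiguous frames alternate between the two colors.
    print(fits_without_eviction([0, 1, 2, 3, 4, 5, 6, 7]))
    # Five frames of the same color compete for four ways.
    print(fits_without_eviction([0, 2, 4, 6, 8, 1, 3, 5]))
```

Since the kernel picks physical frames freely, whether a given run fits depends on the mapping it happened to get, which would be consistent with the occasional slow runs.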

Regarding the ARM DRAM performance, it depends on a lot of things. First, the DRAM frequency shows its impact for the first time here on the T034 once the dataset doesn't fit in the 1MB L2 cache anymore. Second, the mode in which the CPU works (ARMv8 or v7) seems to have a big impact. This may look surprising, but the mode can affect the instruction sequence used to fetch the data, and possibly the ability of the cache to speculate some reads. In ARMv7-thumb2 mode, the "LDRD" instruction is used to read 64-bit pointers. It looks more efficient than what is used in v8. The ClearFog employs DDR3L at an unspecified frequency. Being an "L" version, it may have higher access times than the DDR2 found on the T034. Overall, the performance here is 4-10 times lower than on x86, so it is very important to minimize the risk of hitting DRAM whenever possible.

And now the BogoLocs

By combining just the numbers above we can reproduce the build performance numbers across all architectures. For this we pick the L1 cache transaction rate from the 8kB line (it's stable across all products), the L2 cache rate from the 128kB line (stable across all products), and the DRAM rate from the 16MB line (stable as well). By considering a 96% hit ratio in L1, a 92% hit ratio in L2, and an aggregate hit performance at each level that cannot exceed 1/miss times the rate of the next layer, we get the following metric which approximates the number of lines of code per second measured on real devices.
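One possible reading of this rule can be sketched as follows. It is an interpretation, not necessarily the exact formula used for the published numbers: each level is capped at the rate of the next level divided by its own miss ratio. The function name is hypothetical, the result is in the same unit as the inputs (millions of transactions per second), and any scaling to lines of code per second is not covered.

```python
def capped_rate(l1_rate, l2_rate, dram_rate, l1_hit=0.96, l2_hit=0.92):
    """Aggregate transaction rate under the assumed capping rule.

    A level cannot run faster than the next level can serve its misses,
    i.e. next_rate / miss_ratio.
    """
    l2_eff = min(l2_rate, dram_rate / (1.0 - l2_hit))
    l1_eff = min(l1_rate, l2_eff / (1.0 - l1_hit))
    return l1_eff


if __name__ == "__main__":
    # Illustrative inputs only, not measurements from the graphs.
    print(capped_rate(l1_rate=1000.0, l2_rate=30.0, dram_rate=10.0))
```

With this rule a slow DRAM first limits the effective L2 rate, which in turn can limit the L1 rate once the L2 is slow enough.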

In the end it shows that the L1 speed is critical (it directly depends on frequency), and that the DRAM speed is very important as well, as it can quickly limit the shared L2 speed when the miss rate is too high and multiple cores are hammering it in parallel. At this point it's unknown whether using devices with a 64-bit memory bus will improve the performance or not, but given that dual-channel memory in PCs provides them with 128-bit paths, and that their performance is much higher, we'd be inclined to bet on this.

Introducing a new player - MiQi board - 2016/07/26

This month, mqmaker started shipping the cheapest ever RK3288-based board, the MiQi board. It caught our attention because the photos clearly show that this board uses 64-bit memory, thanks to the SoC's dual channel memory controller. It was a great opportunity to see how such a board would perform with twice the memory bandwidth compared to other ones like the T034/CS008. We ordered the small and cheap one ($35) and finally got a large one (2GB RAM/16GB flash) with all accessories (case, cable, heatsink), as the small ones are not built yet. It is really nice and professional of the vendor to honor orders like this.

First, like most other RK3288-based boards making use of the original rockchip kernel, the kernel locks it at 1.608 GHz despite reporting higher frequencies. It's easy to tell, as any performance test shows the exact same numbers at 1.608 and 1.800. Initial ramlat tests showed extremely low DDR performance (less than half that of the Odroid-C2) and the first build test was very slow as well (45 seconds when about 25 were expected based on the T034). Kernel messages showed that the RAM switched to 200 MHz 10 minutes after boot. Simply issuing the famous "echo p > /dev/video_pstate" changed it to 528 MHz, exactly doubled the build performance, and moved this board from the slowest one to the fastest one. And that's only at 1.6 GHz. Also, the LoC/s/GHz at 4 cores is the highest of all boards.

This proves, as we anticipated and showed in the BogoLocs tests above, that the DDR performance is utterly critical to the build performance, and that this board can do well as it's well equipped. We'll have to rebuild a kernel without this frequency limit, and we may even explore higher DDR3 speeds (we don't know yet the rated frequency of the DDR3 chips), and maybe send back a few patches to the vendor, who provides all sources.

For now, however, assuming the $35 price tag is maintained, it could very well be the highest performing option at quite a low price. And yes, the board has mounting holes so we may stack some of them :-)